#PYDATA

Snowflake offers several ways to run Python inside its compute infrastructure. This post shows how to use Python in user-defined functions, in stored procedures, and in Snowpark. Read More

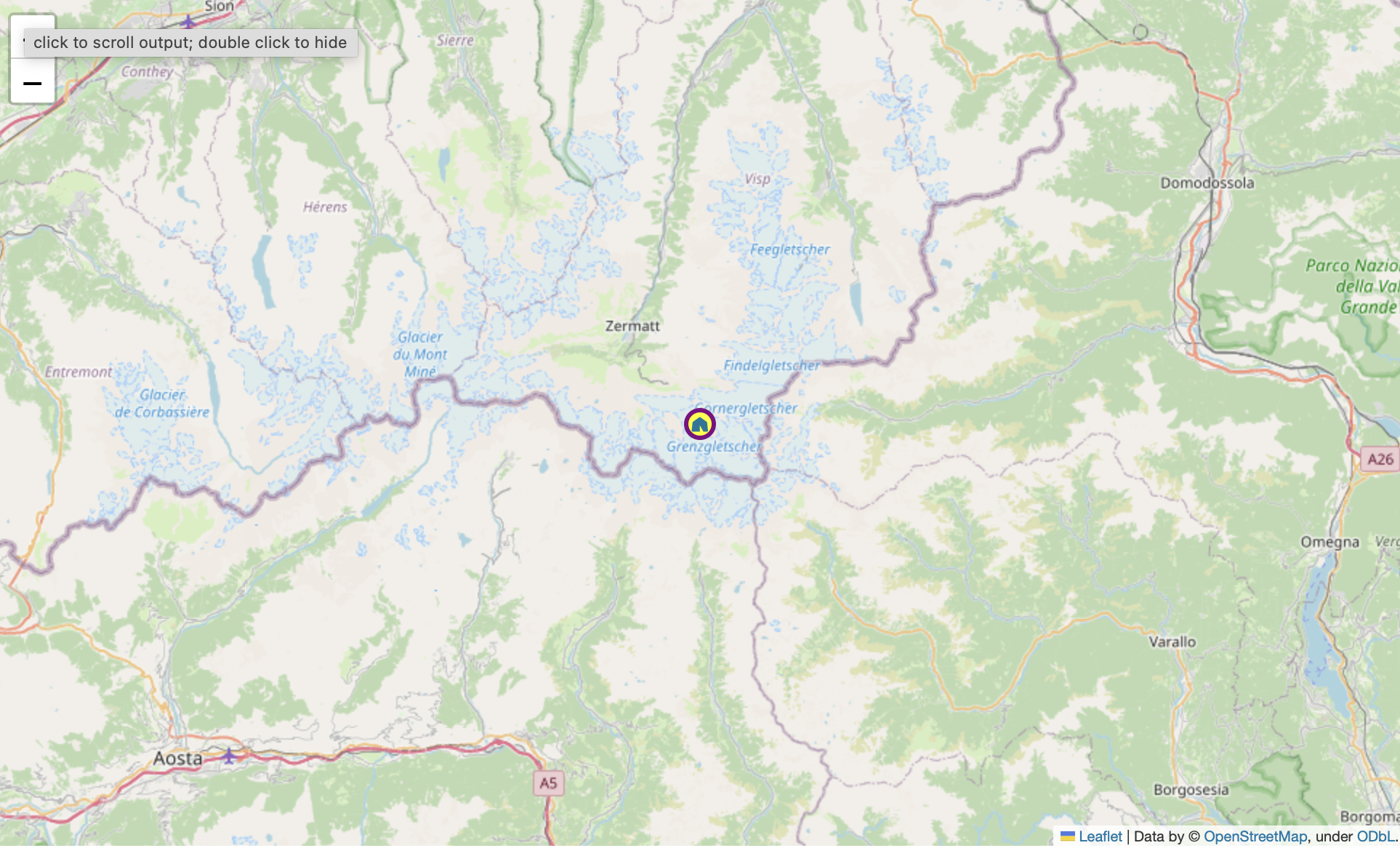

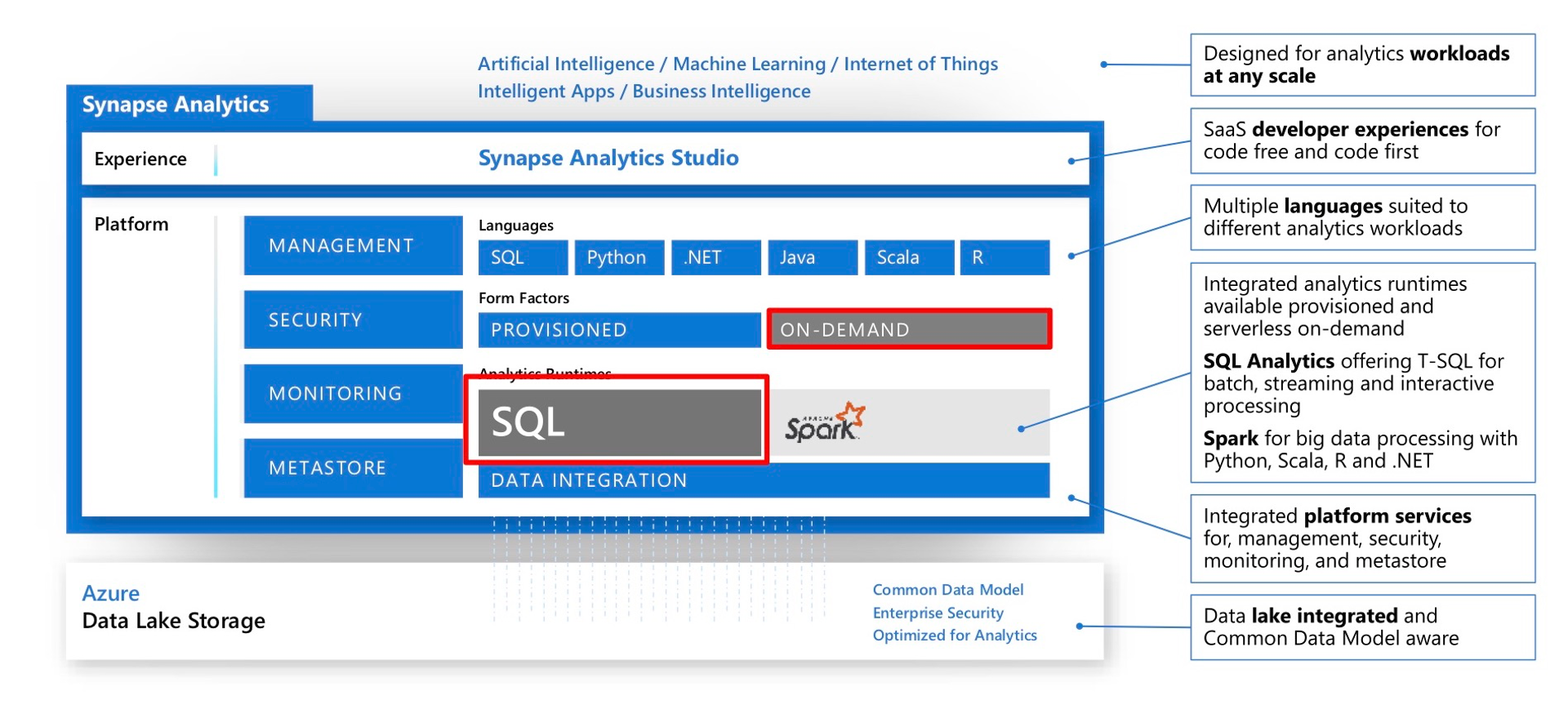

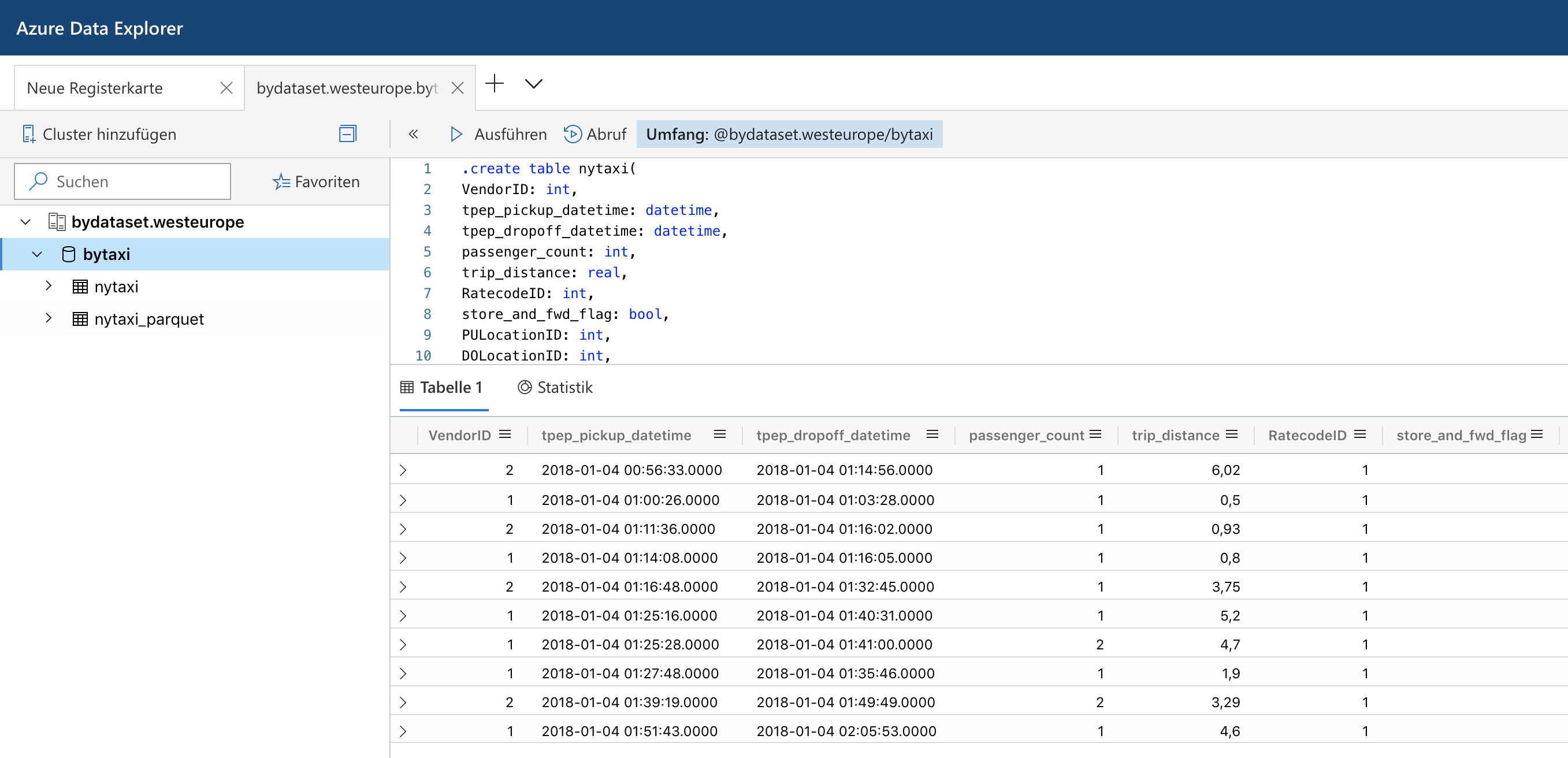

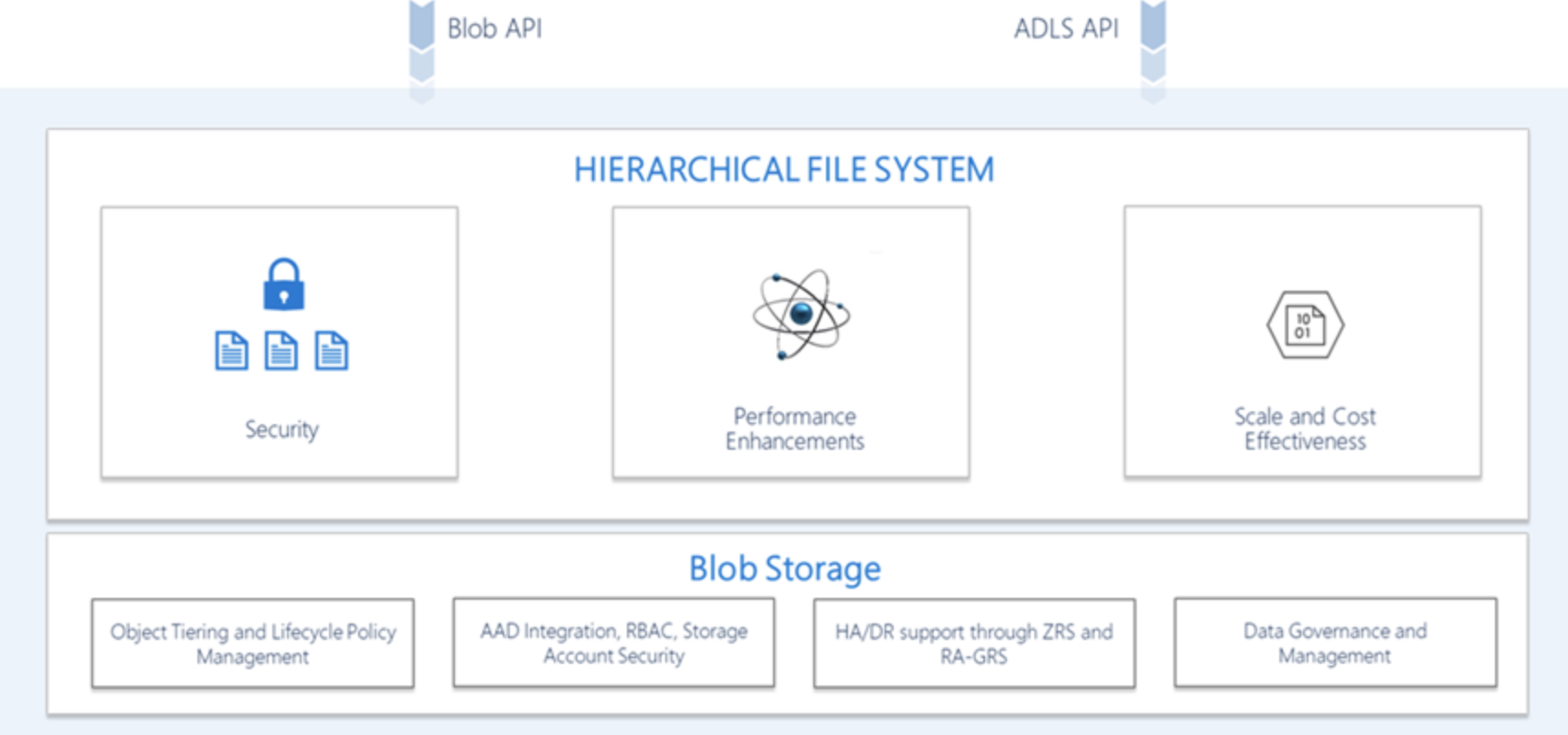

Last summer Microsoft rebranded the Azure Kusto query engine as Azure Data Explorer. While it does not support fully elastic scaling, it does at least let you scale a cluster up and out via an API or the Azure portal to adapt to different workloads. It also supports Parquet out of the box, which prompted me to spend some time looking into it. Read More

Apache Parquet is a columnar file format for working with gigabytes of data. pyarrow exposes efficient reading and writing of Parquet files to Python. Statistics stored alongside the data allow clients to use predicate pushdown to read only the relevant subsets, which reduces I/O. Organizing data by column allows for better compression, as the data is more homogeneous. Better compression also reduces the bandwidth required to read the input. Read More
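To make the predicate pushdown idea concrete, here is a minimal pure-Python sketch of how per-chunk min/max statistics let a reader skip data. It is a toy model, not the Parquet format or the pyarrow API: `RowGroup`, `write_row_groups`, and `read_greater_than` are hypothetical names invented for this illustration.

```python
from dataclasses import dataclass


@dataclass
class RowGroup:
    """A chunk of one column plus the min/max statistics a writer would store."""
    values: list
    min_value: int
    max_value: int


def write_row_groups(values, group_size):
    """Split values into row groups and record min/max statistics for each."""
    groups = []
    for start in range(0, len(values), group_size):
        chunk = values[start:start + group_size]
        groups.append(RowGroup(chunk, min(chunk), max(chunk)))
    return groups


def read_greater_than(groups, threshold):
    """Return values > threshold, skipping row groups whose max rules them out."""
    result, groups_read = [], 0
    for group in groups:
        if group.max_value <= threshold:
            continue  # statistics prove no row can match, so skip the read
        groups_read += 1
        result.extend(v for v in group.values if v > threshold)
    return result, groups_read


# Hypothetical data: 100 sorted integers stored in row groups of 25.
groups = write_row_groups(list(range(100)), group_size=25)
matches, groups_read = read_greater_than(groups, threshold=89)
print(len(matches), "matching rows from", groups_read, "of", len(groups), "row groups")
```

With pyarrow itself, the comparable entry point is the `filters` argument of `pyarrow.parquet.read_table`, which takes predicates such as `[("x", ">", 89)]`. How much I/O it saves depends on how the file was written and sorted.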

Cloudera Kudu is a distributed storage engine for fast data analytics. The Python API is in alpha stage but already usable. Read More